I prepared an AI hackathon

Here's what I learned from it

Hello dear reader,

Sorry for going dark this last month, but I’m sure you’ll understand.

Between a full-time job, family duties, my own AI-related tinkering, Zelda: Tears of the Kingdom’s release, and the beautiful French weather we’ve had lately, it’s been hard to focus on writing. But I haven’t forgotten you 😉.

Last week I had the pleasure of helping host our company’s AI-oriented offsite. So just to get things back on track, I'd like to share some recent discoveries I've made through trial and error.

Disclaimer: AI tools were used to rephrase some sentences and to generate the post’s title image.

I changed my PoC setup

I’ve talked about my PoC setup for working with AI before:

But this time, I had to get 3 separate teams to iterate fast on topics I didn’t know in advance. So the stack had to meet a few new requirements:

It needed an easy setup and deployment method

It had to provide the most extensive set of tools out of the box

I needed both frontend and backend on the same stack

With those in mind, I had to rebuild it from the ground up.

I ditched JavaScript to focus on Python

I like JavaScript, okay? I know it’s a perfect maelstrom of pure chaos, everything a language is and could be all at once. But despite all of that, I actually love its quirks and whatnot.

And I’ve done wonders with it, be it on the backend or frontend. But when I started working with data on my AI projects, oh boy, what a disaster it was.

JavaScript is great at many things but is terrible at providing fully-fledged frameworks and libraries. In 2020 there were already over 1.3 million packages on npm, but to this day, there is still no consensus on what the default backend framework for Node.js even is. And it’s worse when working with data, a lot worse…

So the obvious choice was Python. And while I loathe its heavily object-oriented syntax without proper typings, the Python packages for working with data are just in a different league. I knew it was the language for data scientists, but I had no idea how far ahead of other languages it was.

Just a quick sample so you get my point. I’m building my own AI-assisted tools to monitor news on tech. One of the core features is the ability to send an article’s URL to feed into my database. In JS, I considered myself lucky with @extractus/article-extractor. But in Python, we’ve got Newspaper3k which, on top of essential data, gives you:

Summary extraction

Keyword extraction

Google trending terms extraction

And every data-related library is like this. Even LangChain is leagues better in Python than in JS. Just compare the number of integrations between the two.

So, from now on, I’m sticking with Python on the backend side.

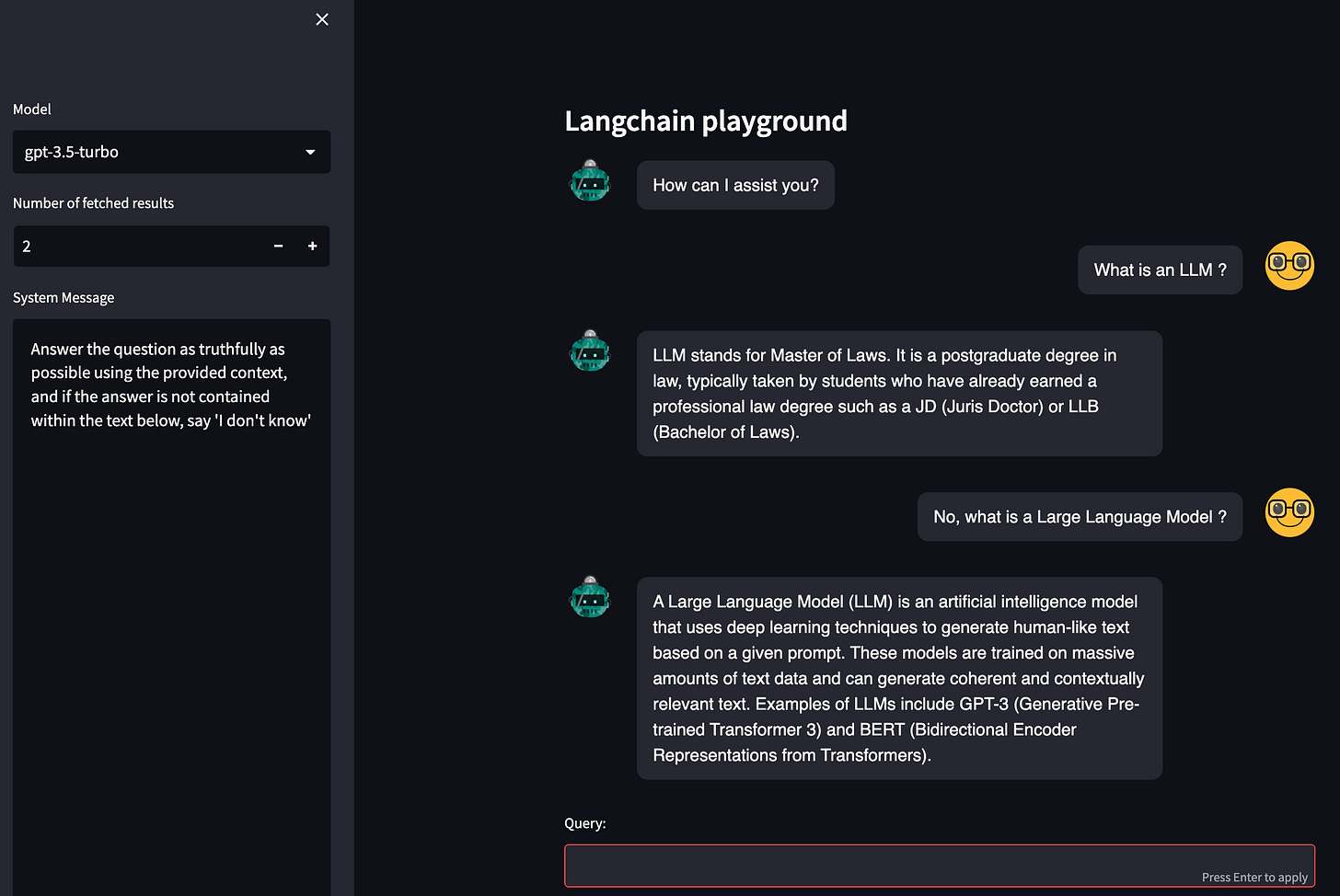

LangChain is great (kind of)

I just mentioned LangChain, and I have to discuss it briefly.

One thing LangChain does well is documenting use cases and making you feel powerful. Open up an integration, copy/paste the code, and voilà! It also thoroughly introduces the essential modules needed to effectively engage with an LLM.

Unfortunately, I can’t say the same about documenting how it’s architected and how it works inside. Going past the usual “getting started” felt incomprehensibly convoluted. I’m sure its focus on object-oriented rather than functional programming doesn’t help. In the end, I had to reimplement each module myself, without the library, to finally get a good grasp of its inner workings.

However, due to its widespread use and numerous integrations, I had no choice but to use it as the primary framework for interacting with LLMs during the hackathon.

And it actually went pretty well. Of course, I split my template code into neatly named files to help my teammates understand and iterate quickly. But, since that experience, I’m considering using more of its parts in my mainline developments.

Streamlit is dope

(unless you want to go into production)

Being stuck with Python, I also needed a way for the devs to quickly iterate on features without spending hours on the frontend.

Fortunately, there is an awesome Python package for that called Streamlit. It allows you to build a pretty reactive frontend with a few lines of code. And even better, they offer their own push-to-prod hosting solution for the site you’ve made.

Here’s a preview of what I made with a dozen lines of code based on this article:

Regrettably, the downside of Streamlit is that while it’s neat for building demos, such as most of Hugging Face’s model pages, the way it works makes it impossible to build a company’s product with it.

Security is a pain

Of the 3 PoCs presented at the end of the offsite, all were terrific demos, but absolutely none were safe:

One let GPT-3.5 respond to users without any validation, potentially putting them in danger or exposing the company to liability.

Another ran generated SQL queries directly on our database, making it prone to SQL injections of the worst order (hello OWASP Top 10 👋).

The last one didn’t do any of those, but has anyone actually figured out how to prevent prompt injection?

And while most of these can be prevented one way or another, the solutions won’t come without some severe feature limitations.
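To make that trade-off concrete, here is a minimal sketch of one way to guard the generated-SQL case, assuming a SQLite database. The function name `run_generated_query` and its parameters are made up for illustration; this is not what the team built, and it is nowhere near a complete defense.

```python
import sqlite3


def run_generated_query(db_path: str, sql: str, max_rows: int = 100) -> list[tuple]:
    """Run one model-generated SELECT against a read-only SQLite connection.

    Hypothetical helper: the name and parameters are assumptions for this sketch.
    """
    statement = sql.strip().rstrip(";").strip()
    # Reject anything that is not a single SELECT statement.
    # This also rejects legitimate queries (CTEs, a ';' inside a string literal).
    if ";" in statement or not statement.lower().startswith("select"):
        raise ValueError("only a single SELECT statement is allowed")
    # mode=ro makes SQLite itself refuse writes, even if the check above is bypassed.
    conn = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)
    try:
        # Cap the number of rows returned to the caller.
        return conn.execute(statement).fetchmany(max_rows)
    finally:
        conn.close()
```

Notice the feature limitations: no writes, no multi-statement scripts, no `WITH` queries, and a hard cap on rows. It also does nothing about which tables the model is allowed to read, which would need database-level permissions.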

So, for now, the most straightforward use cases are definitely internal tools because I’m scared to death of releasing a public AI feature using proprietary data. Good thing OWASP has been branching out to AI lately to help us out.

A quick chat on use cases

Security aside, I’m starting to notice a pattern emerge from the many tools and use cases I’ve encountered lately.

Dealing with chaos

LLMs are great at dealing with unstructured information: we tried giving statements in an unorganized order, often going back on our words, and as you can imagine, GPT-3.5 dealt well with parsing it, and GPT-4 did even better.

They are also getting good at structuring said information. But even with GPT-4 and several system prompt iterations, I’m still getting misses. So we’re still far from ditching the infamous maybeItsJsonButIfNotRetry function, but I’m hopeful for Anthropic’s Claude “constitutional AI”.
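For readers who haven’t written one yet, a maybeItsJsonButIfNotRetry function could look roughly like the sketch below. Everything here is an assumption for illustration: `ask_model` stands in for whatever function sends a prompt to your LLM and returns its text reply, and the retry wording is made up.

```python
import json
from typing import Any, Callable


def parse_json_with_retry(
    ask_model: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> Any:
    """Call the model until its reply parses as JSON, or give up.

    `ask_model` is a hypothetical callable: prompt in, raw text reply out.
    """
    current_prompt = prompt
    for _ in range(max_attempts):
        reply = ask_model(current_prompt)
        # Models often wrap JSON in prose or code fences: keep only the outermost {...}.
        start, end = reply.find("{"), reply.rfind("}")
        candidate = reply[start:end + 1] if start != -1 and end > start else reply
        try:
            return json.loads(candidate)
        except json.JSONDecodeError as error:
            # Feed the parse error back so the next attempt can correct itself.
            current_prompt = (
                f"{prompt}\n\nYour previous reply was not valid JSON ({error}). "
                "Reply with JSON only."
            )
    raise ValueError(f"no valid JSON after {max_attempts} attempts")
```

This only checks that the reply is syntactically valid JSON. Checking that it has the fields you expect is a separate step.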

Killing the ant with a bazooka

Another trend I’m noticing is that because OpenAI’s GPT is so good at dealing with such varied topics and tasks, it’s likely way too powerful for most of these tasks.

One example: while building my own AI-assisted knowledge base, I wanted to extract both a summary and keywords. Those are 2 tasks that, let me remind you, Newspaper3k handles by default using much smaller models that can be installed alongside the rest of your backend.
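To give an idea of how little machinery keyword extraction can need, here is a minimal frequency-based sketch using only the standard library. It is not Newspaper3k’s code, and the function name and the tiny stop-word list are assumptions for illustration.

```python
import re
from collections import Counter

# A tiny illustrative stop-word list; a real one would be much longer.
STOP_WORDS = {
    "a", "an", "and", "are", "as", "at", "for", "in",
    "is", "it", "of", "on", "or", "the", "to", "with",
}


def extract_keywords(text: str, top_n: int = 5) -> list[str]:
    """Return the most frequent words that are not stop words."""
    # Lowercase the text and split it into alphabetic tokens.
    words = re.findall(r"[a-z']+", text.lower())
    # Count tokens, skipping stop words and very short tokens.
    counts = Counter(w for w in words if w not in STOP_WORDS and len(w) > 2)
    return [word for word, _ in counts.most_common(top_n)]
```

The results are obviously cruder than what GPT gives you, but it runs locally, instantly, and for free.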

Another consequence of relying heavily on top LLMs is that I become dependent on private models and constrained to using APIs, which I’m not really a fan of.

So what’s next

Now that I’m starting to get a good feel for the main tools, I’m looking forward to trying out and integrating smaller models to deal with specific use cases.

And, of course, I’ll keep on sharing my discoveries. So, if you’re not subscribed already, it’s only one step away 😁